Deepfakes are manipulated images, videos, or audio recordings created with machine learning (ML) and artificial intelligence (AI) algorithms, typically to replace someone’s face or voice with another person’s and so create a convincing false identity.

Deepfake technology relies on deep learning algorithms, which learn to solve problems by analyzing large amounts of data. These algorithms make it possible to create synthetic media that mimic a person’s appearance and voice with remarkable accuracy.

One of the most common methods for creating deepfakes uses deep neural networks known as autoencoders. The model analyzes a target video together with a series of video clips featuring the individual to be inserted, and the result is a believable video that shows that person within the target video.

How are Deepfakes Generated?

At the heart of this process are techniques such as Generative Adversarial Networks (GANs) and autoencoders, which allow computers to learn and replicate the fine details of human faces and movements. This section outlines the fundamental steps behind deepfake creation: data collection, preprocessing, model training, face swapping, and post-processing. Understanding how deepfakes work is essential for navigating the opportunities and challenges they present in an increasingly digital world.

The process of generating deepfakes involves several steps and technologies, most notably machine learning and neural networks.

Here’s an overview of how deepfakes are generated:

Data Collection:

- Source and Target Media: Obtain extensive video footage or images of both the source person (the person to be replaced) and the target person (the person who will replace the source).

- Training Data: Collect numerous images or video frames of the target person from various angles and lighting conditions.

Preprocessing:

- Face Detection and Alignment: Use face detection algorithms to locate faces in the images or video frames. Align the faces to a standard pose to ensure consistency during training.
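The alignment step can be sketched as a similarity transform (rotation, uniform scale, and translation) that moves two detected eye centers onto fixed positions in a canonical face crop. This is a minimal illustration, assuming the eye landmarks have already been found by a face detector; the canonical coordinates and the helper names `similarity_from_eyes` and `apply_transform` are hypothetical choices, not part of any particular deepfake tool.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# Hypothetical canonical eye positions inside a 100x100 aligned face crop.
CANON_LEFT: Point = (30.0, 40.0)
CANON_RIGHT: Point = (70.0, 40.0)


def similarity_from_eyes(left: Point, right: Point,
                         canon_left: Point = CANON_LEFT,
                         canon_right: Point = CANON_RIGHT):
    """Return (scale, angle, tx, ty) that maps the detected eyes onto the canonical eyes."""
    # Vector between the eyes in the input frame and in the canonical frame.
    dx, dy = right[0] - left[0], right[1] - left[1]
    cdx, cdy = canon_right[0] - canon_left[0], canon_right[1] - canon_left[1]
    # Uniform scale so the eye distance matches the canonical eye distance.
    scale = math.hypot(cdx, cdy) / math.hypot(dx, dy)
    # Rotation that turns the detected eye line into the canonical eye line.
    angle = math.atan2(cdy, cdx) - math.atan2(dy, dx)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Translation chosen so the left eye lands exactly on its canonical position.
    tx = canon_left[0] - scale * (cos_a * left[0] - sin_a * left[1])
    ty = canon_left[1] - scale * (sin_a * left[0] + cos_a * left[1])
    return scale, angle, tx, ty


def apply_transform(point: Point, params) -> Point:
    """Map a point from frame coordinates into the aligned face crop."""
    scale, angle, tx, ty = params
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    x, y = point
    return (scale * (cos_a * x - sin_a * y) + tx,
            scale * (sin_a * x + cos_a * y) + ty)
```

In a real pipeline the same parameters would be used to warp every pixel of the frame, so that all training faces share the same eye positions, size, and orientation.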

Model Training:

- Generative Adversarial Networks (GANs): The core technology behind deepfakes is often GANs, which consist of two neural networks: a generator and a discriminator.

- Generator: Creates fake images intended to look like the target person.

- Discriminator: Evaluates the generated images against real images to determine authenticity.

- These networks are trained together: the generator improves its output based on feedback from the discriminator until the discriminator can no longer reliably distinguish generated images from real ones.

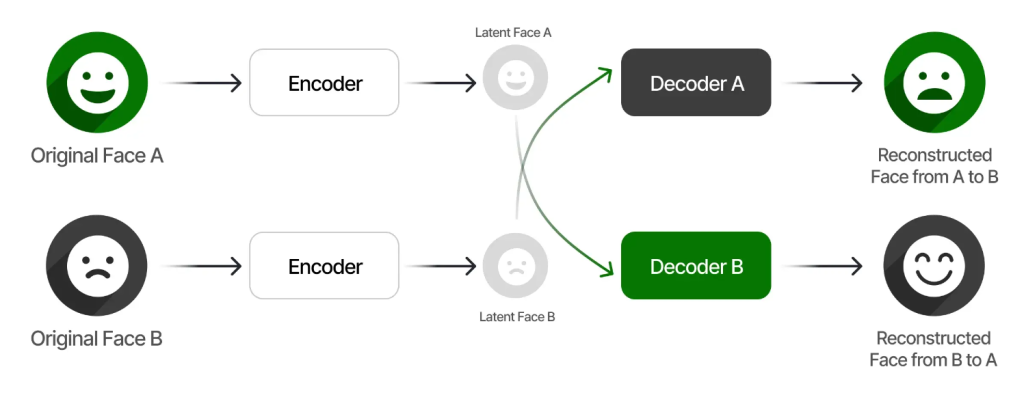
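The adversarial training loop can be made concrete with a deliberately tiny, one-dimensional sketch in pure Python. It is not a deepfake model: the "images" are single numbers drawn from a normal distribution, the generator is the hypothetical linear map G(z) = a*z + b, and the discriminator is a single logistic unit D(x) = sigmoid(w*x + c). The alternating updates follow the generator/discriminator scheme described above, assuming the commonly used non-saturating generator loss; the function name `train_toy_gan` and all default values are illustrative choices.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 128, lr: float = 0.05,
                  real_mean: float = 2.0, real_std: float = 1.0, seed: int = 0):
    """Toy 1-D GAN. Generator: G(z) = a*z + b. Discriminator: D(x) = sigmoid(w*x + c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters (starts away from the real data)
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # Discriminator step: gradient ascent on mean log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for xr, xf in zip(real, fake):
            dr, df = sigmoid(w * xr + c), sigmoid(w * xf + c)
            gw += (1.0 - dr) * xr - df * xf
            gc += (1.0 - dr) - df
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step (non-saturating loss): gradient ascent on mean log D(G(z)),
        # using the freshly updated discriminator.
        ga = gb = 0.0
        for zi in z:
            df = sigmoid(w * (a * zi + b) + c)
            ga += (1.0 - df) * w * zi
            gb += (1.0 - df) * w
        a += lr * ga / batch
        b += lr * gb / batch
    return a, b, w, c
```

Because this discriminator is linear, it can only tell the two distributions apart by their means, so the generator is only expected to move b toward the real mean. Real GANs use deep convolutional networks for both players, which lets the generator match far richer structure such as facial texture and lighting.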

- Autoencoders: Another approach uses autoencoders, which are neural networks trained to compress and decompress images. A shared encoder learns to map both the source and target images to a latent space, while separate decoders map the latent representation to the source and target images, respectively.

- Encoder: Compresses the input image into a lower-dimensional representation.

- Decoder: Reconstructs the image from the compressed representation.
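The shared-encoder, two-decoder design can be illustrated with a toy model. In this sketch, which is a simplification under stated assumptions rather than any real tool's architecture, "faces" are short vectors, the encoder and both decoders are single linear layers trained by gradient descent on reconstruction error, and the class name `SwapAutoencoder` is hypothetical. The `swap` method shows the core idea: a frame of the source person is encoded by the shared encoder and then decoded by the target person's decoder.

```python
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class SwapAutoencoder:
    """Shared linear encoder plus one linear decoder per identity (toy model)."""

    def __init__(self, dim: int, latent: int, seed: int = 0):
        rng = random.Random(seed)
        self.encoder = rand_matrix(latent, dim, rng)               # shared: face -> latent
        self.decoders = {"source": rand_matrix(dim, latent, rng),  # latent -> source face
                         "target": rand_matrix(dim, latent, rng)}  # latent -> target face

    def reconstruct(self, x: Vector, who: str) -> Vector:
        return matvec(self.decoders[who], matvec(self.encoder, x))

    def loss(self, data: Dict[str, List[Vector]]) -> float:
        # Mean of 0.5 * squared reconstruction error over all faces of both identities.
        errs = [0.5 * sum((r - xi) ** 2 for r, xi in zip(self.reconstruct(x, who), x))
                for who, faces in data.items() for x in faces]
        return sum(errs) / len(errs)

    def train_step(self, data: Dict[str, List[Vector]], lr: float) -> None:
        n = sum(len(faces) for faces in data.values())
        g_enc = [[0.0] * len(row) for row in self.encoder]
        g_dec = {who: [[0.0] * len(row) for row in m] for who, m in self.decoders.items()}
        for who, faces in data.items():
            dec = self.decoders[who]
            for x in faces:
                h = matvec(self.encoder, x)
                err = [r - xi for r, xi in zip(matvec(dec, h), x)]
                # Gradient for this identity's decoder only.
                for i, e in enumerate(err):
                    for k, hk in enumerate(h):
                        g_dec[who][i][k] += e * hk / n
                # Gradient for the shared encoder (error passed back through the decoder).
                back = [sum(dec[i][k] * err[i] for i in range(len(err))) for k in range(len(h))]
                for k, bk in enumerate(back):
                    for j, xj in enumerate(x):
                        g_enc[k][j] += bk * xj / n
        for k, row in enumerate(self.encoder):
            for j in range(len(row)):
                row[j] -= lr * g_enc[k][j]
        for who, m in self.decoders.items():
            for i, row in enumerate(m):
                for k in range(len(row)):
                    row[k] -= lr * g_dec[who][i][k]

    def swap(self, source_face: Vector) -> Vector:
        # Face swap: encode a source frame, then decode it with the target decoder.
        return self.reconstruct(source_face, "target")
```

Because both identities pass through the same encoder, the latent code tends to capture shared attributes such as pose and expression, while each decoder learns to render one specific face. Practical systems use deep convolutional encoders and decoders trained on many aligned face crops.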

Face Swapping:

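- Deepfake Algorithm: Use the trained models to perform the face swap. The model generates frames of the target person’s face that match the expressions and movements of the source person’s face in the original video. In the autoencoder approach, for example, each frame of the source person is passed through the shared encoder and then reconstructed with the target person’s decoder.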

- Seamless Integration: Blending and color correction are applied so that the swapped face looks natural in the video. This may involve warping the generated face to fit the original head’s position and feathering the edges of the face region.
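Feathered blending can be sketched as alpha compositing with a soft mask: inside the face region the swapped pixels are used, and over a narrow band at the edge the weight fades linearly toward the original frame, which hides the seam. This minimal sketch assumes a grayscale image stored as nested lists and a circular face region; real tools typically shape the mask to the face landmarks, and the function names here are hypothetical. Color correction is not shown.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def feathered_circle_mask(height: int, width: int, cy: float, cx: float,
                          radius: float, feather: float) -> Image:
    """Alpha mask: 1 inside the face circle, fading linearly to 0 over `feather` pixels."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            d = math.hypot(y - cy, x - cx)
            alpha = 1.0 - (d - radius) / feather  # above 1 inside, below 0 far outside
            row.append(min(1.0, max(0.0, alpha)))
        mask.append(row)
    return mask


def blend(original: Image, swapped: Image, mask: Image) -> Image:
    """Alpha-composite the swapped face over the original frame."""
    return [[a * s + (1.0 - a) * o for o, s, a in zip(orow, srow, mrow)]
            for orow, srow, mrow in zip(original, swapped, mask)]
```

A wider `feather` band gives a softer, less visible transition at the cost of mixing more of the original face into the edge of the result.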

Post-Processing:

- Lip Syncing: Ensure that the target person’s lip movements match the source person’s speech.

- Audio Syncing: Adjust the audio track to align with the modified video if necessary.

- Refinement: Apply additional filters and adjustments to improve the overall realism and coherence of the final video.
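One simple way to check lip and audio synchronization, sketched here under stated assumptions, is to compare a per-frame "mouth opening" signal (for example, derived from lip landmarks) with the audio loudness in each frame's time window, and pick the time shift at which the two correlate best. The function `estimate_av_offset` and both input signals are hypothetical; production tools often rely on learned audio-visual models instead.

```python
from typing import Sequence


def estimate_av_offset(mouth_open: Sequence[float], audio_energy: Sequence[float],
                       max_lag: int) -> int:
    """Estimate how many frames the audio lags behind the video.

    mouth_open[t]: how open the mouth is in video frame t.
    audio_energy[t]: loudness of the audio in the window aligned with frame t.
    A positive result means the audio is late by that many frames.
    """
    m_mean = sum(mouth_open) / len(mouth_open)
    a_mean = sum(audio_energy) / len(audio_energy)

    def score(lag: int) -> float:
        # Average product of the mean-centered signals over the overlapping frames.
        total, count = 0.0, 0
        for t, m in enumerate(mouth_open):
            u = t + lag
            if 0 <= u < len(audio_energy):
                total += (m - m_mean) * (audio_energy[u] - a_mean)
                count += 1
        return total / count if count else float("-inf")

    return max(range(-max_lag, max_lag + 1), key=score)
```

The estimated offset can then be used to shift the audio track so that speech lines up with the lip movements.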

The Risks and Dangers of Deepfakes

The rise of deepfake technology has brought significant risks and dangers to our digital landscape. Deepfakes can be used to:

- Spread disinformation and propaganda

- Manipulate political discourse

- Damage reputations

Moreover, deepfakes can be used for malicious purposes like blackmail and fraud. For instance, a deepfake video can be used to impersonate someone and extort money or sensitive information.

The risks of deepfakes also extend to security and privacy. Facial liveness verification, a feature of facial recognition systems that uses computer vision to confirm that a live user is present, can be vulnerable to deepfake-based attacks in which synthetic video of a face is presented in place of the real person.

A successful attack of this kind can let an impostor pass identity checks, exposing user data and undermining the applications that rely on those checks.

Deepfakes have three major types of implications:

- Political Implications of Deepfakes

- Social Implications of Deepfakes

- Economic Implications of Deepfakes

Political Implications of Deepfakes

In a political context, deepfakes could harm democratic processes, disrupt government policy, and sow discord among citizens.

The ability to create convincing fake videos of political leaders can lead to confusion, disinformation, and a loss of trust in public institutions. Such disinformation could also further distort an already complicated media ecosystem.

While legislation to ban political deepfakes would require significant carve-outs to protect freedom of expression, social media platforms can still limit or prohibit their use.

Fortunately, workable solutions are emerging from academic researchers and established technology companies such as Adobe and Microsoft.

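The Coalition for Content Provenance and Authenticity (C2PA), whose founding members include Adobe and Microsoft, has created open, royalty-free technical standards for certifying the source and history of media content, helping to combat disinformation.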
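The core idea behind content provenance is to bind signed claims (who created a file, with which tool, what edits were made) to a cryptographic hash of the exact media bytes, so that any later change is detectable. The C2PA specification itself uses certificate-based digital signatures and a manifest format of its own; the sketch below is only an illustration of the principle, assuming a shared secret key and substituting an HMAC for a real signature. The function names and manifest fields are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, claims: dict, key: bytes) -> dict:
    """Bind claims (creator, tool, edits...) to the exact bytes of a media file."""
    body = {"sha256": hashlib.sha256(content).hexdigest(), "claims": claims}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    # Stand-in for a certificate-based signature: an HMAC over the manifest body.
    body["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return body


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the manifest is untampered and still matches the content."""
    body = {"sha256": manifest["sha256"], "claims": manifest["claims"]}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # manifest was altered or signed with a different key
    # Any edit to the media bytes changes the hash and breaks the binding.
    return hashlib.sha256(content).hexdigest() == manifest["sha256"]
```

A deepfake made from signed footage would fail verification, because altering even one byte of the video changes its hash; public-key signatures, as used in real provenance systems, additionally let anyone verify without holding a secret.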

Even so, digital media literacy remains essential, because deepfakes can be difficult to identify. Readers and viewers should stay vigilant and verify the authenticity of online media before trusting or sharing it.

Social Implications of Deepfakes

Social media platforms’ domination of our online lives has created an ever-present danger of deepfake content spreading unchecked.

Unfortunately, the creators of deepfakes have used this technology to exploit and harm people. For example, cybercriminals use deepfakes to commit identity theft and online fraud, while individuals fall victim to deepfake-enabled scams.

Furthermore, using deepfakes to create fake adult videos and images of public figures and celebrities can tarnish their reputation and dignity.

Scarlett Johansson ‘shocked’ by AI chatbot imitation

Hollywood star Scarlett Johansson has said she was left “shocked” and “angered” after OpenAI launched a chatbot with an “eerily similar” voice to her own.

The actress said she had previously turned down an approach by the company to voice its new chatbot, which reads text aloud to users.

When OpenAI’s new GPT-4o model debuted in May 2024, commentators were quick to draw comparisons between the chatbot’s “Sky” voice and Johansson’s in the 2013 film “Her”.

OpenAI said on Monday that it would remove the voice, but insisted that it was not meant to be an “imitation” of the star.

However, Johansson accused the company, and its founder Sam Altman, of deliberately copying her voice, in a statement seen by the BBC on Monday evening.

“When I heard the released demo, I was shocked, angered and in disbelief that Mr Altman would pursue a voice that sounded so eerily similar to mine,” she wrote.

“Mr Altman even insinuated that the similarity was intentional, tweeting a single word ‘her’ – a reference to the film in which I voiced a chat system, Samantha, who forms an intimate relationship with a human.”

Released in 2013, Her sees Joaquin Phoenix fall in love with his device’s operating system, which is voiced by Ms Johansson.

Economic Implications of Deepfakes

In today’s information-based economy, deepfakes can cause severe damage to businesses and economies.

One of the most significant economic impacts is the potential for market manipulation. Deepfakes can spread false or misleading information about a company, moving its stock price and prompting decisions that benefit the deepfakes’ creators.

Furthermore, deepfakes can be used to falsify financial data, leading to incorrect predictions and investment decisions. This can have a ripple effect throughout the economy, as inaccurate financial data can lead to wrong assessments of market trends and risks.

Additionally, deepfakes can harm the reputation of businesses and individuals, resulting in lost revenue and opportunities. The spread of deepfake videos and images can cause negative publicity, mistrust, and loss of credibility, which can be challenging to recover from.

As a result, businesses and governments are exploring ways to prevent their malicious use.

One approach uses AI and ML algorithms to detect deepfakes; another uses blockchain technology to keep a secure and immutable record of original data, making later manipulation detectable.
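The tamper-evidence that blockchain records provide comes from hash chaining: each entry stores a hash of the registered data plus the hash of the previous entry, so editing any past entry breaks every link after it. The sketch below shows only that chaining idea, assuming a single local ledger; a real blockchain also replicates the ledger across many parties and uses a consensus protocol, which is omitted here. The class and method names are hypothetical.

```python
import hashlib
import json
from typing import List


def _entry_hash(index: int, content_hash: str, prev_hash: str) -> str:
    record = json.dumps({"index": index, "content": content_hash, "prev": prev_hash},
                        sort_keys=True)
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


class HashChainLedger:
    """Append-only log in which each entry commits to the entry before it."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, data: bytes) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        content_hash = hashlib.sha256(data).hexdigest()
        index = len(self.entries)
        self.entries.append({"index": index, "content": content_hash, "prev": prev,
                             "hash": _entry_hash(index, content_hash, prev)})

    def is_consistent(self) -> bool:
        """Recompute every link; an edited entry makes the check fail."""
        prev = "0" * 64
        for i, e in enumerate(self.entries):
            if e["index"] != i or e["prev"] != prev:
                return False
            if e["hash"] != _entry_hash(i, e["content"], prev):
                return False
            prev = e["hash"]
        return True

    def contains(self, data: bytes) -> bool:
        """Check whether these exact bytes were registered earlier."""
        h = hashlib.sha256(data).hexdigest()
        return any(e["content"] == h for e in self.entries)
```

An organization could register the hash of each official video or recording when it is published; a circulating clip whose hash is not in the ledger is then not an unmodified copy of any registered original.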

Conclusion

As deepfake technology advances, the risks of digital deception grow more significant, necessitating reliable methods to authenticate data and protect its integrity.

At AInexxo, we guarantee data integrity and quality assurance with certified proof both before and after AI processing, whether in open or closed connectivity environments.

Our commitment ensures organizations confidently leverage AI technologies, maintaining transparency and reliability while safeguarding against manipulation. This approach promotes ethical and trustworthy AI applications across diverse industry sectors.