What is the Artificial Intelligence Act?

The European Union's Artificial Intelligence Act is a major step toward a comprehensive regulatory framework governing the development, deployment, and use of AI technologies in its member states, and it is a cornerstone of the EU's broader digital strategy. The Act plays a crucial role because it seeks to ensure that AI applications meet safety standards and respect European values and laws, including privacy, equality, and human oversight. It also aims to foster innovation and economic growth, so its implications extend well beyond regulatory compliance, promising a safer and more innovative future for AI in Europe.

Timeline for the Full Implementation of the AI Act

Once formally endorsed by the European Parliament and the Council, the AI Act enters into force on the 20th day after its publication in the Official Journal of the European Union. Most of its provisions become applicable 24 months after entry into force, but implementation is phased, with some obligations applying earlier and one set later:

- Prohibitions on AI systems posing unacceptable risk apply 6 months after entry into force, so such systems must be phased out by then;

- Obligations governing general-purpose AI apply at the 12-month mark;

- The rest of the AI Act, including the requirements for the high-risk AI systems listed in Annex III (catalogue of high-risk use cases), applies at the 24-month mark;

- Obligations for high-risk systems covered by the legislation listed in Annex II (compilation of Union harmonization legislation) apply after 36 months.
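The phased timeline is simple month arithmetic on top of the entry-into-force date. The sketch below shows that arithmetic under stated assumptions: the publication date passed in is hypothetical, the phase labels and helper names (`add_months`, `entry_into_force`, `milestones`) are illustrative only, and the adopted text of the Act fixes each application date explicitly, so treat the output as an estimate rather than a legal deadline.

```python
from datetime import date, timedelta
import calendar


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def entry_into_force(publication: date) -> date:
    """20th day following publication: the day after publication counts as day 1."""
    return publication + timedelta(days=20)


# Month offsets from entry into force, as summarized in the list above.
# Labels are hypothetical shorthand, not terms from the Act.
PHASES = {
    "prohibited AI systems": 6,
    "general-purpose AI obligations": 12,
    "full application incl. Annex III high-risk": 24,
    "Annex II high-risk obligations": 36,
}


def milestones(publication: date) -> dict[str, date]:
    """Estimate each phase's start date from a (hypothetical) publication date."""
    start = entry_into_force(publication)
    return {phase: add_months(start, offset) for phase, offset in PHASES.items()}


if __name__ == "__main__":
    for phase, when in milestones(date(2024, 1, 10)).items():
        print(f"{when.isoformat()}  {phase}")
```

With the hypothetical publication date of 10 January 2024, entry into force falls on 30 January 2024, the prohibitions phase on 30 July 2024, and the 36-month phase on 30 January 2027.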

What are the risk levels in the EU AI Act?

The Act adopts a risk-based approach, sorting AI systems into four levels: unacceptable risk, high risk, limited risk, and minimal or no risk. Each level carries its own regulatory requirements, so that the degree of oversight matches the potential for harm. The goal is to ensure the safe and responsible development of AI systems in the EU.

Unacceptable risk

Unacceptable risk is the highest level of risk. This tier covers eight (initially four) types of AI application that are considered incompatible with EU values and fundamental rights and are therefore prohibited. These are applications related to:

- Subliminal manipulation

- Exploitation of the vulnerabilities of persons resulting in harmful behavior

- Biometric categorization of persons based on sensitive characteristics

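- Social scoring

- Real-time remote biometric identification in publicly accessible spaces for law enforcement (subject to narrow exceptions)

- Emotion recognition in the workplace and in educational institutions

- Predictive policing based solely on profiling

- Untargeted scraping of facial images to build facial recognition databases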

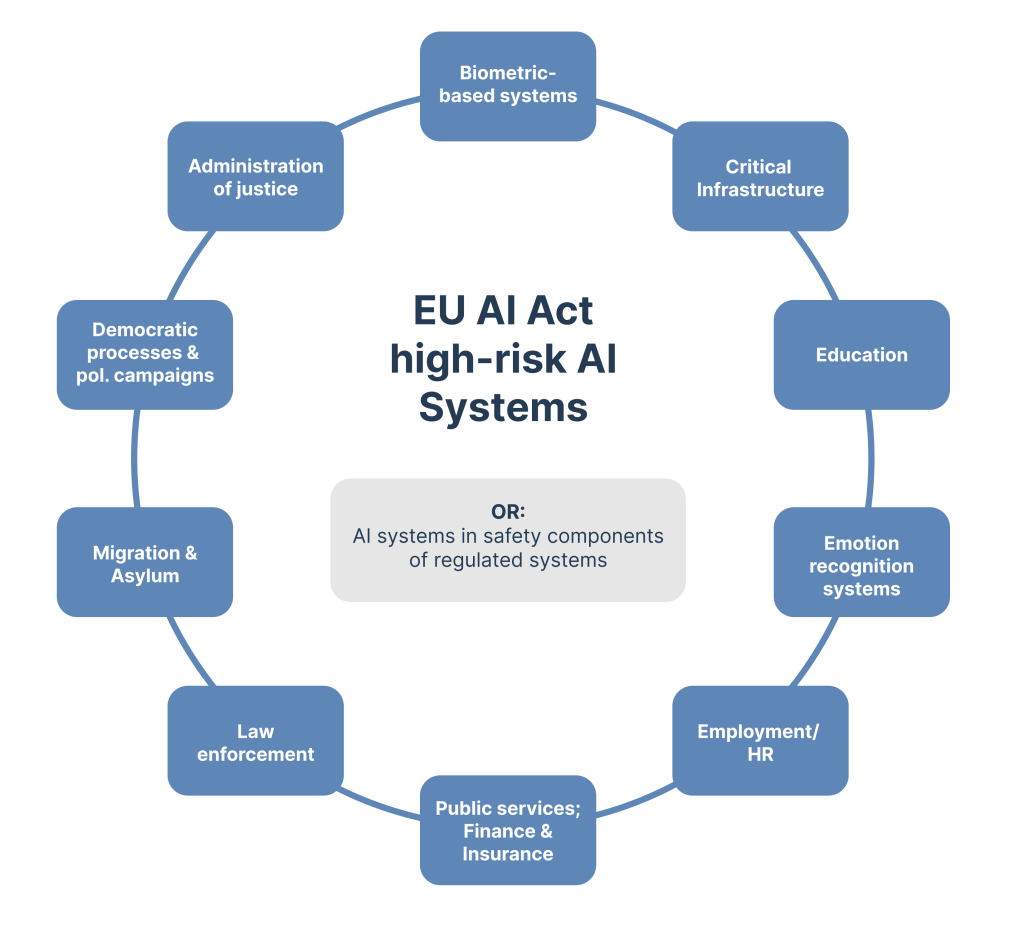

High risk

The EU AI Act classifies AI systems that pose significant risks to health, safety, or fundamental rights as high risk. This classification extends to AI systems used in toys, aviation, cars, medical devices, and elevators, all of which are products covered by EU product safety legislation.

Furthermore, the Act recognizes AI systems in critical infrastructures, such as transport systems, as high risk if their failure could endanger lives. Beyond physical safety, the legislation aims to safeguard human rights and quality of life. For example, AI used for scoring exams or sorting resumes is deemed high risk due to its potential impact on individuals’ career paths and futures.

The Act also identifies various law enforcement activities as high risk. These include assessing the reliability of evidence, verifying travel documents at immigration control, and conducting remote biometric identification, given their significant implications for individuals’ rights and freedoms.

Limited and minimal risk

Limited risk covers AI systems with specific transparency needs, such as a chatbot used for customer service. Users must be informed that they are interacting with a machine, and offering them the option to speak to a human instead is a sensible complement to that disclosure. Limited-risk systems therefore rely on transparency and on the user's informed choice to continue or withdraw.

The lowest level of risk in the AI Act is minimal risk, which covers applications such as AI-enabled video games and email spam filters. These applications face no additional obligations under the Act.
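To make the four tiers concrete, the sketch below maps the example use cases mentioned in this article to their tier, together with a one-line summary of what each tier implies. The `RiskTier` enum, the use-case keys, and the `classify` helper are hypothetical illustrations, not an official classification tool; classifying a real system depends on the Act's detailed criteria and annexes.

```python
from enum import Enum


class RiskTier(Enum):
    """The Act's four risk levels, with a short summary of the consequence."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations such as risk management and human oversight"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Hypothetical labels for the examples named in this article only.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "untargeted_facial_scraping": RiskTier.UNACCEPTABLE,
    "exam_scoring": RiskTier.HIGH,
    "resume_sorting": RiskTier.HIGH,
    "travel_document_verification": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
    "video_game_ai": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Look up a labelled example; anything else needs a proper assessment."""
    try:
        return EXAMPLE_USE_CASES[use_case]
    except KeyError:
        raise ValueError(
            f"{use_case!r} is not a labelled example; assess it against the Act"
        ) from None


if __name__ == "__main__":
    for case in ("resume_sorting", "customer_service_chatbot", "spam_filter"):
        tier = classify(case)
        print(f"{case}: {tier.name} ({tier.value})")
```

Raising an error for unknown use cases, rather than defaulting to a tier, reflects the point that the tier follows from an assessment of the specific system, not from a lookup.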

The Influence on AI Development

The AI Act seeks to boost safe, ethical AI innovation by giving developers clear rules. Critics, however, worry that its strict rules, especially for high-risk AI without third-party checks, could hinder innovation.

- Economic Implications: The Act’s introduction of a unified regulatory landscape is expected to boost investor confidence and stimulate growth within the AI industry. Yet, the financial burden of compliance and the need for operational adjustments present significant challenges. Small and medium-sized enterprises (SMEs), in particular, may struggle with these new requirements due to their limited resources compared to larger companies.

- Societal Impact: The Act focuses on protecting EU citizens, aiming for secure, transparent, and fair AI. It bans harmful AI practices, such as manipulative techniques and social scoring, to build trust in AI technology. Its emphasis on ethical AI use is a key step toward greater societal well-being and responsible AI innovation.

- Technology Development Impact: The stringent regulations set by the Act, particularly for high-risk AI systems, could inadvertently stifle innovation. Developers may view the regulatory landscape as restrictive, deterring them from experimenting and exploring new AI capabilities. This environment could decelerate technological progress and the introduction of innovative AI applications.

What is the international dimension of the EU’s approach?

The European Union is working to become a global leader in promoting trustworthy AI internationally through the AI Act and the Coordinated Plan on AI. At the intersection of geopolitics, commercial stakes, and security concerns, AI has emerged as a strategic area of importance.

Given AI's usefulness and potential, countries worldwide now treat it as a marker of their commitment to technological progress. As AI regulation takes shape, the EU aims to lead, together with international partners, in setting global AI standards in line with the rules-based multilateral system and the values it upholds.

The EU intends to strengthen ties with key partners, including Japan, the US, India, Canada, South Korea, Singapore, and the Latin American and Caribbean region. It also aims to work closely with multilateral and regional organizations such as the OECD, the G7, the G20, and the Council of Europe.

Conclusion

At AInexxo, we follow the AI Act’s rules to ensure ethical, responsible AI development in our sectors. We align with these standards for legal compliance and to offer AI solutions that enhance client efficiency, innovation, and sustainability. Our commitment strengthens our goal to support the industry with safe, effective AI for all businesses.